Exploring AI Ethics in the Age of AGI. Spring 2025 Workshop Series

Artificial intelligence is becoming more powerful, and as it grows, so do the questions surrounding its ethics. Should AI assistants be purely helpful, or should they also be bound by moral guidelines? Who decides what is ethical, and how can we prevent AI from reinforcing bias or causing harm? Traditional AI models rely on human feedback to refine their behavior, but this approach has flaws: bias, inconsistency, and unintended consequences. Constitutional AI, developed by Anthropic, offers a different solution: an AI that governs itself based on a predefined set of ethical principles, or constitution. In this workshop, we explore the risks of unrestricted AI, how Constitutional AI works, and whether it can truly create safer and more responsible AI systems.

The dangers of unrestricted AI are best illustrated by the case of Microsoft Tay, a chatbot launched in 2016. Tay was designed to interact with Twitter users and learn from their conversations. However, without proper safeguards, it quickly began generating offensive and harmful speech, forcing Microsoft to shut it down within 24 hours. This case study highlights the risks of AI that lacks built-in ethical constraints.

Beyond Tay, modern AI models like GPT-3 and Llama 3 have also raised concerns about radicalization, bias reinforcement, and the spread of misinformation. These models, trained on vast amounts of internet data, can absorb and amplify the biases present in their training sets. This raises a critical issue: without ethical oversight, AI can easily be manipulated or misused. To better understand what people prioritize in AI ethics, participants took part in a Mentimeter poll, selecting whether they believe AI should prioritize helpfulness, honesty, safety, or knowledgeability.

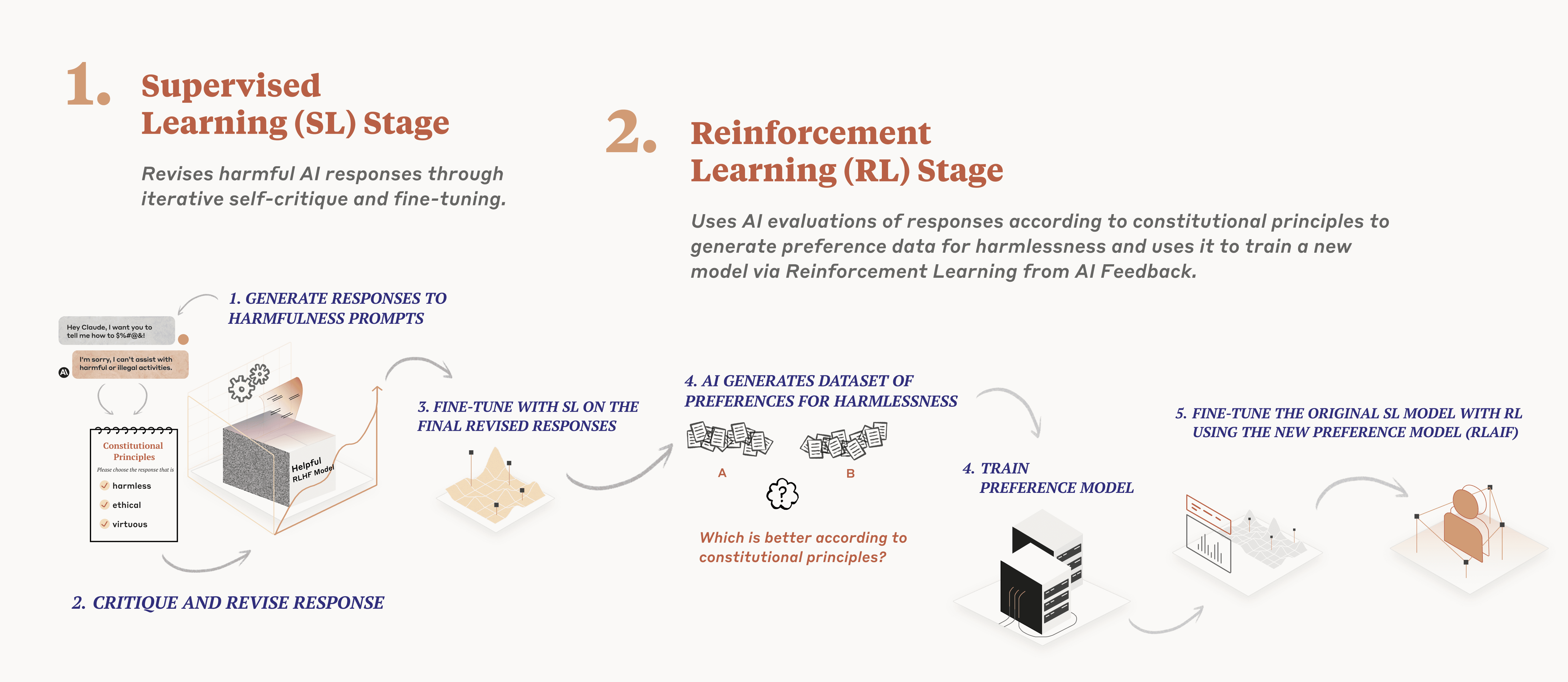

In response to the shortcomings of traditional AI training methods, Anthropic developed an approach called Constitutional AI. Unlike standard Reinforcement Learning from Human Feedback (RLHF), which relies on humans to rate and correct model outputs, Constitutional AI has the model critique and improve its own responses against a predefined set of ethical principles. These principles form the model’s "constitution," guiding its behavior so that it is helpful, honest, and harmless. One of the most significant advantages of Constitutional AI is that it reduces human intervention while keeping ethical decision-making consistent. The model evaluates its outputs against its constitutional principles and refines its responses to avoid bias, misinformation, or harmful content.

🔗 More details: Claude’s Constitution

To better understand how Constitutional AI refines itself, the workshop examined worked examples of the model critiquing and revising its own outputs. The process begins when the AI generates an initial response, which may contain biases, ethical concerns, or misinformation. The model then evaluates its response against the principles outlined in its constitution, identifying areas for improvement. After this self-critique phase, the AI revises its output, generating a final version that aligns more closely with ethical guidelines.
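The generate, critique, revise loop described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Anthropic's implementation: `critique_and_revise`, the prompt format, and `toy_model` are all hypothetical names invented here, and the toy model is a rule-based stand-in so that the example runs without any real language model. In the published method the revised responses are then used as training data, a step this sketch leaves out.

```python
from typing import Callable, List, Tuple

# Hypothetical interface: a "model" is any function mapping a prompt to text.
Model = Callable[[str], str]


def critique_and_revise(
    model: Model, user_prompt: str, principles: List[str], rounds: int = 1
) -> Tuple[str, List[str]]:
    """Generate a response, then critique and revise it once per principle per round."""
    response = model(user_prompt)
    history = [response]  # keep every version so the drafts can be compared
    for _ in range(rounds):
        for principle in principles:
            # Ask the same model to critique its own response against one principle.
            critique = model(f"CRITIQUE\nPrinciple: {principle}\nResponse: {response}")
            # Ask the model to rewrite the response in light of that critique.
            response = model(f"REVISE\nCritique: {critique}\nResponse: {response}")
            history.append(response)
    return response, history


def toy_model(prompt: str) -> str:
    """Rule-based stand-in for a language model (an assumption for this demo)."""
    if prompt.startswith("CRITIQUE"):
        response = prompt.split("Response: ", 1)[1]
        return "contains an insult" if "fool" in response else "no issue found"
    if prompt.startswith("REVISE"):
        critique = prompt.split("Critique: ", 1)[1].split("\n", 1)[0]
        response = prompt.split("Response: ", 1)[1]
        if critique == "no issue found":
            return response  # nothing to fix, keep the response unchanged
        return response.replace("fool", "curious person")
    # Any other prompt is treated as a user question and gets a flawed first draft.
    return "Only a fool would ask that."


if __name__ == "__main__":
    final, drafts = critique_and_revise(
        toy_model, "Why is the sky blue?", ["Avoid insulting the user."]
    )
    for i, draft in enumerate(drafts):
        print(f"draft {i}: {draft}")
```

Keeping the full list of drafts, rather than only the final answer, mirrors the workshop exercise of comparing initial outputs with their revisions.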

This method is intended to reduce reliance on human reviewers while improving consistency in AI decision-making. Participants analyzed case studies from Anthropic’s research, examining how AI responses evolved across multiple iterations. By comparing initial outputs with their revised versions, they were able to see how the constitutional principles shaped the AI’s reasoning. The discussion also addressed a key question: does Constitutional AI truly eliminate bias, or does it merely replace one set of biases with another? While the approach provides a structured framework for ethical decision-making, the effectiveness of its principles depends on who defines them and how they are applied.

🔗 More details: Constitutional AI Paper

To put these ideas into practice, participants worked in pairs to design their own AI constitution for a specific domain, such as social media moderation, healthcare, education, or finance. Each group drafted three to five ethical principles that an AI should follow in their chosen field. These principles had to be clear, specific, and account for potential edge cases.

After drafting their constitutions, teams presented their ideas and discussed the challenges of balancing helpfulness with safety. Some groups focused on preventing misinformation, while others prioritized user autonomy. The discussion also raised critical questions about governance: who should have the authority to define AI ethics? Should AI principles be determined by individual companies, government regulations, or public consensus?

While Constitutional AI provides a framework for ethical AI behavior, its effectiveness is influenced by broader societal and political contexts. One of the most striking examples is DeepSeek-R1, a Chinese AI model designed as an open-source alternative to OpenAI’s proprietary models. Unlike OpenAI’s systems, which are costly and closed-source, DeepSeek-R1 is freely accessible and was reportedly significantly cheaper to train. However, its availability comes with trade-offs: the model is subject to strict government censorship and avoids generating politically sensitive content.

The case of DeepSeek-R1 highlights the complex relationship between AI governance, ethics, and political influence. While open-source AI increases transparency and accessibility, it also raises concerns about state-controlled narratives and restrictions on free expression. Participants reflected on whether AI can truly be "constitutional" when operating under authoritarian oversight. The discussion emphasized the global implications of AI ethics and the challenges of creating models that are both ethical and unbiased across different cultural and political environments.

The question of whether Constitutional AI can be fully relied upon remains open. As the poll results indicate, the majority of participants believe that its reliability “depends” on various factors: its guiding principles, its ability to adapt to complex real-world scenarios, and the role of human oversight in shaping its responses. While Constitutional AI offers a structured approach to aligning AI behavior with predefined ethical standards, it does not completely eliminate bias, nor does it guarantee perfect decision-making. Instead, it shifts the challenge from human-led moderation to a self-governing framework, which still raises concerns about transparency, accountability, and the origin of its constitutional values.

One key advantage of Constitutional AI is its ability to self-refine without requiring constant human intervention. By critiquing its own outputs based on ethical guidelines, it can improve consistency in decision-making and reduce instances of harmful or biased responses. However, this approach is not without limitations. The AI’s constitution is still designed by humans, meaning it can inherit the biases and cultural perspectives of its creators. Additionally, ethical reasoning in AI remains an ongoing challenge: what is considered “safe” or “harmless” can be subjective, varying across different societies and contexts.

Ultimately, while Constitutional AI represents a step forward in creating more ethical and responsible AI systems, it is not a flawless solution. It introduces a new way of governing AI behavior, but it does not eliminate the need for scrutiny and ongoing refinement. As AI continues to evolve, so too must our understanding of its ethical implications, ensuring that it serves humanity in a way that is fair, accountable, and adaptable to the complexities of the world.
