An overview of LangChain's framework for building intelligent, context-aware AI applications, focusing on document retrieval, embedding, and dynamic agent-driven reasoning to enhance language model performance.

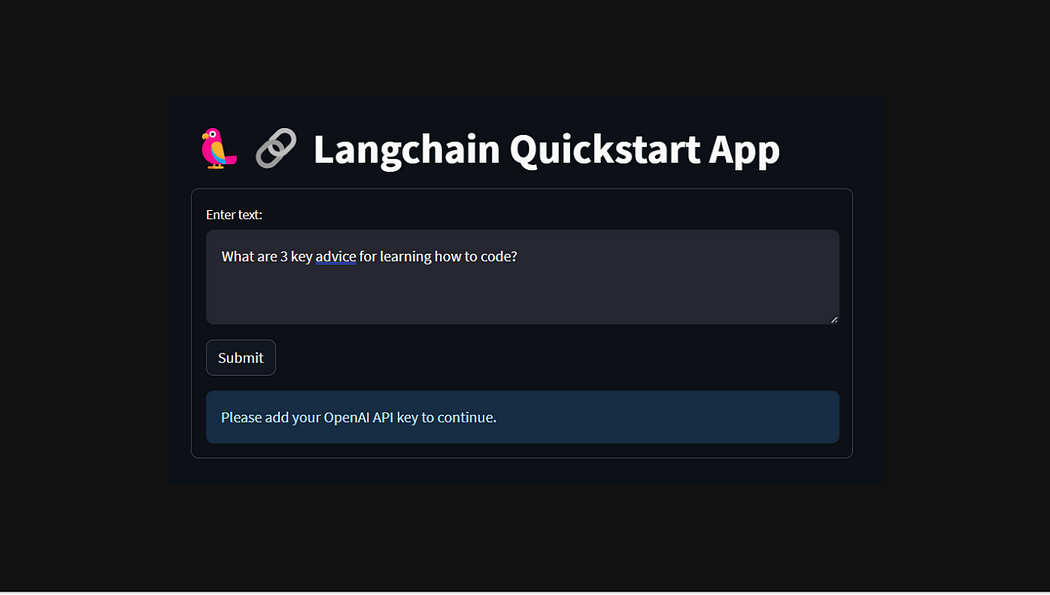

LangChain is a framework for developing context-aware applications powered by language models. It rests on two ideas: context awareness (connecting a model to sources of context) and reasoning (relying on the model to decide how to respond or which actions to take). The framework includes the LangChain Libraries, available in Python and JavaScript, which provide interfaces and integrations for a wide range of components. It also provides a basic runtime for combining those components into chains and agents.

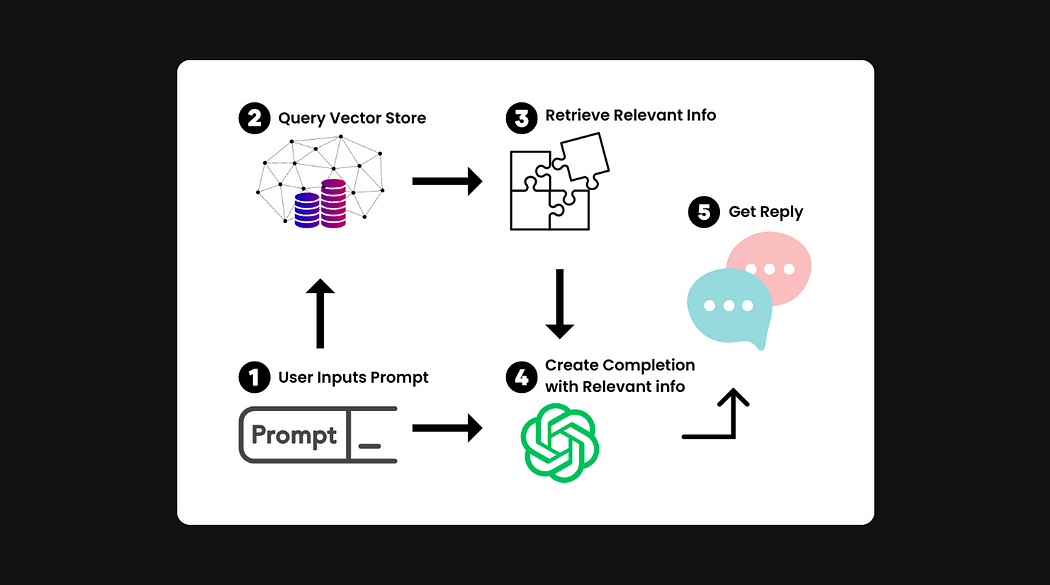

LangChain targets large language model (LLM) applications that need user-specific data outside the model’s training set. It addresses this need through Retrieval Augmented Generation (RAG): external data is retrieved and then passed to the language model during the generation step.

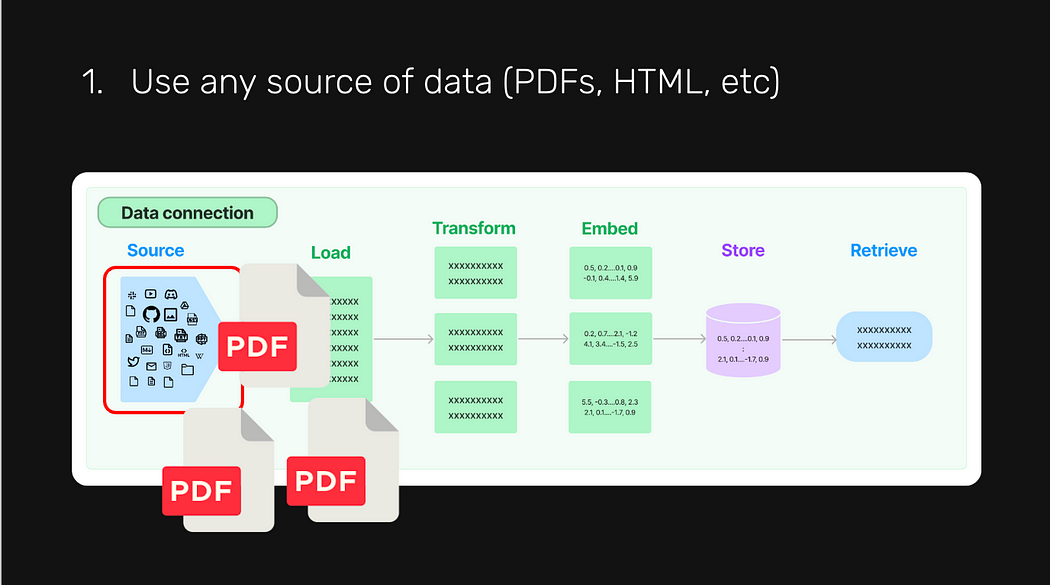

LangChain provides building blocks for RAG applications, and its documentation covers the retrieval step in detail. The process rests on several key modules:

Document Loaders: LangChain offers over 100 document loaders, including integrations with providers such as Airbyte and Unstructured. They load documents of various types (HTML, PDF, code) from many kinds of locations, from private S3 buckets to public websites.

Document Transformers: Critical to the retrieval process is fetching only the relevant portions of documents. LangChain addresses this challenge through transformation algorithms, with a primary focus on splitting or chunking large documents into manageable segments. Tailored logic optimized for specific document types, such as code or markdown, is also provided.
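The chunking idea described above can be illustrated with a minimal, framework-free sketch. It is not LangChain's splitter: the function name `split_text` and the parameters `chunk_size` and `chunk_overlap` are assumptions chosen for illustration, and the split is by raw character count rather than by document structure.

```python
def split_text(text: str, chunk_size: int = 200, chunk_overlap: int = 40) -> list[str]:
    """Split text into chunks of at most chunk_size characters.

    Consecutive chunks share chunk_overlap characters so that a sentence
    cut at a boundary still appears intact in at least one chunk.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")

    step = chunk_size - chunk_overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the current chunk already reaches the end of the text
    return chunks


if __name__ == "__main__":
    for chunk in split_text("abcdefghij", chunk_size=4, chunk_overlap=1):
        print(chunk)
```

A splitter tuned for code or markdown would cut on structural boundaries (functions, headings) instead of fixed character offsets; the overlap idea stays the same.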

Text Embedding Models: Creating embeddings for documents is another pivotal aspect of retrieval, capturing the semantic meaning of text for efficient similarity searches. LangChain facilitates integrations with over 25 embedding providers and methods, offering a range from open-source to proprietary APIs. The framework maintains a standard interface, enabling easy model swapping.

Vector Stores: The rise of embeddings necessitates databases for efficient storage and retrieval. LangChain integrates with over 50 vector stores, accommodating both open-source local options and cloud-hosted proprietary solutions. The framework ensures a standard interface, allowing users to seamlessly switch between vector stores.
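To make the roles of an embedding model and a vector store concrete, here is a toy sketch in plain Python. Everything in it is an assumption for illustration: `embed` is a bag-of-words stand-in for a real embedding model, and `ToyVectorStore` with its `add_texts` and `similarity_search` methods is a hypothetical class, not LangChain's implementation.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorStore:
    """Hypothetical in-memory store: keeps (text, vector) pairs in a list."""

    def __init__(self, embed_fn=embed):
        self._embed = embed_fn  # swapping the model only means passing another function
        self._rows = []

    def add_texts(self, texts):
        for text in texts:
            self._rows.append((text, self._embed(text)))

    def similarity_search(self, query: str, k: int = 2) -> list[str]:
        query_vector = self._embed(query)
        ranked = sorted(self._rows, key=lambda row: cosine(query_vector, row[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


if __name__ == "__main__":
    store = ToyVectorStore()
    store.add_texts(["cats purr when happy", "dogs bark at strangers"])
    print(store.similarity_search("why do cats purr", k=1))
```

Because the store only sees the embedding function through one call, replacing the toy `embed` with a real model would not change the search code, which is the point of a standard interface.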

Retrievers: Once data is stored, it has to be retrieved effectively. LangChain supports several retrieval algorithms beyond plain similarity search. The Parent Document Retriever creates multiple embeddings per parent document, so small chunks can be matched while larger context is returned. The Self Query Retriever separates the semantic part of a user query from any metadata filters the query expresses. The Ensemble Retriever retrieves documents from multiple sources or with several algorithms and combines the results.
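One common way to combine ranked results from several retrievers is reciprocal rank fusion. The sketch below assumes that approach purely for illustration and is not LangChain's code; the function name and the constant `k = 60` are conventional choices, not values taken from this article.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of document ids into one ranking.

    Each document earns 1 / (k + rank) from every list it appears in,
    so documents ranked well by several retrievers rise to the top.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


if __name__ == "__main__":
    keyword_hits = ["a", "b", "c"]   # e.g. results from a keyword retriever
    vector_hits = ["b", "c", "d"]    # e.g. results from a vector retriever
    print(reciprocal_rank_fusion([keyword_hits, vector_hits]))
```

Documents "b" and "c" appear in both lists, so they outrank "a" and "d", each of which only one retriever found.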

Indexing: LangChain’s Indexing API synchronizes data from any source into a vector store, optimizing efficiency by avoiding duplicated content, preventing unnecessary rewrites of unchanged content, and saving computation resources by skipping re-embedding unchanged content. This not only enhances search results but also contributes to time and cost savings.
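The core of this behaviour, skipping content that has already been indexed, can be sketched with a content hash. This is a simplified illustration under stated assumptions, not the Indexing API itself: `sync`, `content_hash`, and the dictionary used as a store are hypothetical, and the sketch does not handle deleting stale documents.

```python
import hashlib


def content_hash(text: str) -> str:
    """Stable fingerprint of a document's content."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def sync(documents, store: dict, embed_fn) -> dict:
    """Embed and store only documents whose content is not already indexed.

    store maps content hash -> embedding; embed_fn is any callable that
    turns text into a vector. Returns counts of added and skipped documents.
    """
    stats = {"added": 0, "skipped": 0}
    for text in documents:
        key = content_hash(text)
        if key in store:
            stats["skipped"] += 1  # unchanged or duplicated: no re-embedding
            continue
        store[key] = embed_fn(text)
        stats["added"] += 1
    return stats


if __name__ == "__main__":
    index = {}
    fake_embed = lambda text: [float(len(text))]  # placeholder for a real model
    print(sync(["alpha", "beta", "alpha"], index, fake_embed))
    print(sync(["alpha", "beta", "gamma"], index, fake_embed))
```

On the second call only the new document is embedded, which is where the time and cost savings come from.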

Agents are a central concept in LangChain: they use a language model to choose the sequence of actions an application takes. A traditional chain follows a predetermined sequence of actions hardcoded by the developer, whereas an agent uses the language model as a reasoning engine.

The essence of agents lies in their capacity to leverage language models to make informed decisions about which actions to execute and in what order. This departure from static, predetermined sequences introduces a dynamic layer of adaptability and intelligence to applications built within the LangChain framework.
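The decision loop behind an agent can be sketched without any framework. In the sketch below the language model is replaced by `fake_llm`, a hard-coded stand-in, and the tools `word_count` and `shout` are hypothetical; a real agent would prompt an LLM and parse its reply to obtain the next action.

```python
from typing import Callable


def word_count(text: str) -> str:
    return str(len(text.split()))


def shout(text: str) -> str:
    return text.upper()


# Hypothetical tool registry: the agent may only call what is listed here.
TOOLS: dict[str, Callable[[str], str]] = {"word_count": word_count, "shout": shout}


def fake_llm(question: str, observations: list[str]) -> tuple[str, str]:
    """Stand-in for a language model. Returns (action, action_input)."""
    if not observations:
        if "how many words" in question.lower():
            return "word_count", question
        return "shout", question
    return "finish", observations[-1]  # a tool has run, so report its result


def run_agent(question: str, decide=fake_llm, max_steps: int = 5) -> str:
    """Ask the model for the next action until it decides to finish."""
    observations: list[str] = []
    for _ in range(max_steps):
        action, action_input = decide(question, observations)
        if action == "finish":
            return action_input
        observations.append(TOOLS[action](action_input))
    raise RuntimeError("agent did not finish within max_steps")


if __name__ == "__main__":
    print(run_agent("How many words are here"))
    print(run_agent("hello"))
```

The order of tool calls is not written into `run_agent`; it comes from whatever `decide` returns at each step, which is the difference from a hardcoded chain.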

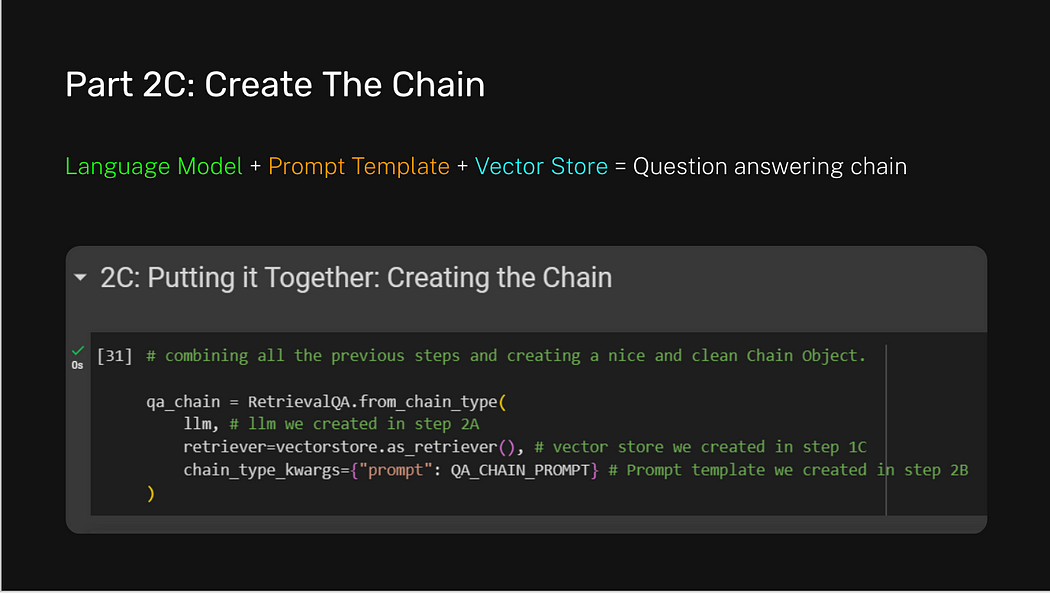

In a chain, components are called sequentially, each passing its output to the next, and together they solve a specific task.
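A minimal sketch of such a chain, written in plain Python rather than with LangChain itself, is shown below. The helper `make_chain` and the three steps are hypothetical, and `fake_model` stands in for a real language model call. LangChain's Python library offers its own composition syntax for comparable prompt, model, and parser steps.

```python
from functools import reduce
from typing import Any, Callable


def make_chain(*steps: Callable[[Any], Any]) -> Callable[[Any], Any]:
    """Compose steps so each one receives the previous step's output."""
    def run(value: Any) -> Any:
        return reduce(lambda acc, step: step(acc), steps, value)
    return run


def format_prompt(topic: str) -> str:
    return f"Write one sentence about {topic}."


def fake_model(prompt: str) -> str:
    # Placeholder for a language model call; it echoes the prompt with padding.
    return f"  MODEL OUTPUT for: {prompt}  "


def parse_output(raw: str) -> str:
    return raw.strip()


# The sequence is fixed in code: prompt -> model -> parser.
chain = make_chain(format_prompt, fake_model, parse_output)

if __name__ == "__main__":
    print(chain("retrieval"))
```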

In all, LangChain’s framework streamlines data retrieval for language models, giving developers a comprehensive set of tools for improving the performance of their applications. We hope this article helped you learn more about LangChain!