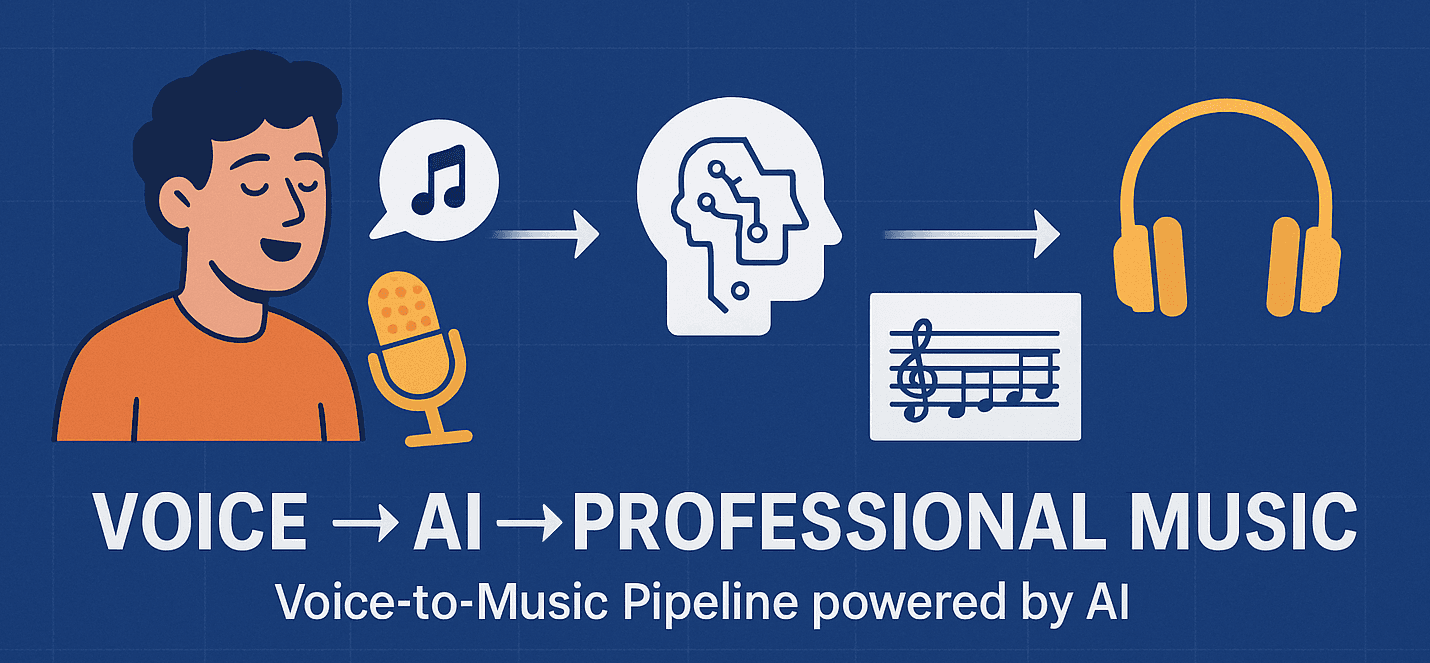

After producing music for years, I realized how steep and intimidating the learning curve is for the average person trying to get into music production. So I set out to build my own digital audio workstation (DAW) that uses AI to let anyone make music purely from intuition.

The name Phonauto is inspired by the phonautograph, the earliest known device for recording sound. Just as the phonautograph first translated sound into a visual medium, Phonauto translates your raw musical ideas (a hummed melody, a beatboxed rhythm, a described vibe) into structured, fully editable music.

Phonauto is a web application that functions as an intelligent DAW. It's designed from the ground up to empower users who may not know music theory to create music with the most intuitive instruments they have: their voice and their imagination.

Create: Hum a melody, and the hum2melody model transcribes it into editable musical notes. Beatbox a rhythm, and the beatbox2drums model converts it into a full drum pattern.

Arrange: Describe a part, style, or mood (e.g., "add a funky bassline" or "create a cinematic pad"), and the Arranger AI crafts full-band accompaniment that fits the key, tempo, and harmony of the existing tracks.

Refine: Dive deep into the composition with a suite of professional tools. Edit the underlying music code in a VS Code-powered editor, make precise adjustments in an interactive piano roll, or balance the final mix in a dedicated mixer panel.

Play: Hear every change immediately through a real-time audio engine, which keeps the creative process fluid and engaging.

Phonauto is built on a three-part architecture designed for scalability and a clean separation of concerns:

- The AI Brain (Backend): the intelligent core, built with FastAPI.
  - Transcription AI: We developed two custom-trained neural networks. hum2melody combines a Harmonic CNN with a BiLSTM and achieves 98% pitch accuracy, while beatbox2drums uses a dual-CNN pipeline for onset detection and classification and reaches 99.39% classification accuracy.
  - Generative AI: The Arranger is powered by Anthropic's Claude Haiku 4.5 model. An extensive prompting system analyzes the music's context (key, tempo, chord progression, and so on) and gives the LLM detailed instrument knowledge plus strict syntax rules for our custom music DSL, so the generated music stays contextually aware and musically coherent.
- The Studio (Frontend): the user's interactive music workspace, built with Next.js, React, and TypeScript.
  - Audio Engine: All audio is synthesized and scheduled in real time on the client with Tone.js, so playback is instantaneous.
  - Multi-Modal Editing: We integrated the Monaco Editor (the editor that powers VS Code) for editing our custom music DSL, alongside a fully featured piano roll and timeline for visual editing. The three views are synced bi-directionally, so a change made in one appears in the others.
  - User Management: Supabase handles user authentication and cloud project storage.
- The Orchestra (Runner): a lightweight Node.js and Express server that acts as a high-speed interpreter. It parses the custom music DSL sent by the frontend and compiles it into a format the Tone.js audio engine can play, offloading the parsing work from the client.
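A frame-level pitch model like hum2melody still needs a post-processing step before its output can appear as notes in a piano roll. The sketch below shows one common way to do that, assuming the model emits one pitch estimate in Hz per analysis frame (with `None` for unvoiced frames). The names `frames_to_notes` and `hz_to_midi` and the `min_frames` jitter threshold are hypothetical illustrations, not Phonauto's actual decoding code.

```python
# Hypothetical sketch: turning per-frame pitch estimates into discrete notes.
# Assumes one pitch value in Hz per frame; this is NOT hum2melody's real decoder.
import math
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class Note:
    midi: int        # MIDI note number (60 = middle C)
    start: float     # onset time in seconds
    duration: float  # length in seconds


def hz_to_midi(freq_hz: float) -> int:
    """Round a frequency to the nearest equal-tempered MIDI note (A4 = 440 Hz = 69)."""
    return round(69 + 12 * math.log2(freq_hz / 440.0))


def frames_to_notes(pitches_hz: Sequence[Optional[float]], hop_s: float,
                    min_frames: int = 3) -> List[Note]:
    """Merge runs of frames that round to the same MIDI pitch into notes.

    None or non-positive values mark unvoiced frames (silence or breath).
    Runs shorter than min_frames are dropped as pitch-tracking jitter.
    """
    notes: List[Note] = []
    current: Optional[int] = None  # pitch of the run in progress (None = silence)
    run_start = 0                  # frame index where that run began

    def close_run(end_frame: int) -> None:
        # Emit the finished run if it was voiced and long enough.
        if current is not None and end_frame - run_start >= min_frames:
            notes.append(Note(current, run_start * hop_s,
                              (end_frame - run_start) * hop_s))

    for i, freq in enumerate(pitches_hz):
        midi = hz_to_midi(freq) if freq is not None and freq > 0 else None
        if midi != current:
            close_run(i)
            current, run_start = midi, i
    close_run(len(pitches_hz))
    return notes
```

Rounding to the nearest semitone snaps slightly off-pitch humming onto the equal-tempered grid, which is usually what a beginner wants; the cost is that deliberate bends and slides are flattened into steps.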
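beatbox2drums detects onsets with a CNN. To illustrate what onset detection means, the sketch below swaps in a much simpler classical technique, energy-based onset detection: split the signal into frames and flag any frame whose energy jumps sharply over the previous one. This is an illustrative baseline only, not Phonauto's dual-CNN pipeline, and the `ratio` and `floor` thresholds are arbitrary assumptions. In the real pipeline, a second stage then classifies each detected hit before it becomes a drum note.

```python
# Hypothetical sketch: a classical energy-based onset detector.
# Swapped in for illustration; beatbox2drums itself uses a dual-CNN pipeline.
from typing import List, Sequence


def frame_energies(samples: Sequence[float], frame_size: int) -> List[float]:
    """Sum of squared samples in each non-overlapping frame (a trailing partial frame is ignored)."""
    return [sum(x * x for x in samples[i:i + frame_size])
            for i in range(0, len(samples) - frame_size + 1, frame_size)]


def detect_onsets(samples: Sequence[float], sample_rate: int, frame_size: int = 512,
                  ratio: float = 4.0, floor: float = 1e-4) -> List[float]:
    """Return onset times in seconds: frames whose energy exceeds `ratio` times the previous frame's.

    `floor` keeps near-silent frames from triggering on tiny absolute changes.
    The first frame has no predecessor, so it is never reported as an onset.
    """
    energies = frame_energies(samples, frame_size)
    onsets = []
    for i in range(1, len(energies)):
        prev, cur = energies[i - 1], energies[i]
        if cur > floor and cur > ratio * max(prev, floor):
            onsets.append(i * frame_size / sample_rate)
    return onsets
```

Timing resolution here is one frame (`frame_size / sample_rate` seconds), and a fixed energy ratio is easily fooled by breath noise or overlapping sounds, which is one motivation for a learned onset detector.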
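The Runner compiles DSL text into something the audio engine can schedule. Phonauto's actual DSL syntax is not described in this write-up, so the sketch below invents a tiny hypothetical one (`tempo <bpm>` and `note <pitch> <start_beat> <length_beats>`, with `//` comments) and compiles it into a time-sorted list of events in seconds, the kind of flat event list a scheduler such as a Tone.js `Part` can consume. It is written in Python, whereas the real Runner is a Node.js service.

```python
# Hypothetical sketch: compiling an invented mini-DSL into timed events.
# The syntax below is an assumption, not Phonauto's actual music DSL.
import re
from dataclasses import dataclass
from typing import List

# Semitone offsets of natural notes within an octave (C = 0).
NOTE_OFFSETS = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}
PITCH_RE = re.compile(r"^([A-G])([#b]?)(-?\d)$")  # e.g. C4, F#3, Bb2


@dataclass
class Event:
    time: float      # seconds from the start of playback
    duration: float  # seconds
    midi: int        # MIDI note number (C4 = 60)


def pitch_to_midi(name: str) -> int:
    match = PITCH_RE.match(name)
    if not match:
        raise ValueError(f"bad pitch: {name!r}")
    letter, accidental, octave = match.groups()
    shift = {"#": 1, "b": -1, "": 0}[accidental]
    return 12 * (int(octave) + 1) + NOTE_OFFSETS[letter] + shift


def compile_dsl(source: str) -> List[Event]:
    """Compile the hypothetical DSL into events timed in seconds.

    Assumes a single global tempo (if `tempo` appears more than once, the
    last value wins): notes are collected in beats first and converted to
    seconds once the whole source has been read.
    """
    bpm = 120.0  # default tempo if none is declared
    notes_in_beats = []  # (midi, start_beat, length_beats)
    for lineno, raw in enumerate(source.splitlines(), start=1):
        line = raw.split("//", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        cmd, *args = line.split()
        if cmd == "tempo" and len(args) == 1:
            bpm = float(args[0])
        elif cmd == "note" and len(args) == 3:
            notes_in_beats.append((pitch_to_midi(args[0]), float(args[1]), float(args[2])))
        else:
            raise ValueError(f"line {lineno}: cannot parse {raw.strip()!r}")
    sec_per_beat = 60.0 / bpm
    events = [Event(start * sec_per_beat, length * sec_per_beat, midi)
              for midi, start, length in notes_in_beats]
    return sorted(events, key=lambda e: e.time)
```

Doing this parsing on a server keeps the client focused on synthesis; the trade-off is that every code edit needs a round trip before the new events can play.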

Several accomplishments stand out:

- State-of-the-Art Custom AI Models: We are incredibly proud of reaching 98% pitch accuracy with hum2melody and 99.39% classification accuracy with beatbox2drums. These custom-trained models give the user's creative workflow a reliable foundation.
- Innovative Three-Part Architecture: Decoupling the backend, frontend, and runner is a major technical achievement. It keeps the system scalable, maintainable, and performant, and client-side synthesis makes the experience feel instantaneous.
- Context-Aware Generative AI: The Arranger acts as a true creative partner. Because we engineered the system to give the LLM deep musical context, it generates music that is harmonically and rhythmically aware of the user's work.
- Seamless Multi-Modal Editing: Bi-directional synchronization between the code editor, piano roll, and timeline is a key accomplishment. It supports a flexible workflow for both code-oriented and visually oriented musicians, a combination that is rare in music software.
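To make "giving the LLM deep musical context" concrete, the sketch below assembles an Arranger prompt from a hypothetical `MusicContext` record (key, tempo, chords, existing tracks), instrument guidance, and DSL rules. The structure and wording are assumptions; Phonauto's real prompts are not shown in this write-up, and the actual call to Claude Haiku 4.5 is deliberately left out.

```python
# Hypothetical sketch: assembling a context-rich prompt for an arranger LLM.
# Field names and prompt wording are illustrative assumptions.
from dataclasses import dataclass, field
from typing import List


@dataclass
class MusicContext:
    key: str                      # e.g. "A minor"
    tempo_bpm: float
    chords: List[str]             # e.g. ["Am", "F", "C", "G"]
    existing_tracks: List[str] = field(default_factory=list)


def build_arranger_prompt(ctx: MusicContext, request: str,
                          instrument_notes: List[str], dsl_rules: List[str]) -> str:
    """Assemble a prompt that states the musical context before the user's request."""
    sections = [
        "You are an arranger working inside a digital audio workstation.",
        f"Key: {ctx.key}",
        f"Tempo: {ctx.tempo_bpm:g} BPM",
        f"Chord progression: {' | '.join(ctx.chords)}",
        f"Existing tracks: {', '.join(ctx.existing_tracks) or 'none'}",
        "Instrument guidance:\n" + "\n".join(f"- {note}" for note in instrument_notes),
        "Output rules (follow exactly):\n"
        + "\n".join(f"{i}. {rule}" for i, rule in enumerate(dsl_rules, start=1)),
        f"User request: {request}",
    ]
    return "\n\n".join(sections)
```

Putting the context and the numbered syntax rules before the request means the model reads the constraints first; the output can then be validated by the same parser that handles hand-written code.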
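One common way to achieve the bi-directional sync described above is to keep a single shared note model as the source of truth and have every view re-render from it, skipping updates that the view itself originated so edits do not loop back. The sketch below assumes that design and uses a hypothetical `note <pitch> <start_beat> <length_beats>` text format; the class and function names are illustrative, and this is not Phonauto's implementation, whose frontend is written in TypeScript.

```python
# Hypothetical sketch: a shared model that keeps a code view and a piano roll in sync.
# The text format and all names here are illustrative assumptions.
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Note:
    pitch: str     # e.g. "C4"
    start: float   # in beats
    length: float  # in beats


def notes_to_text(notes: List[Note]) -> str:
    """Serialize notes to the hypothetical DSL, one line per note, ordered by time."""
    ordered = sorted(notes, key=lambda n: (n.start, n.pitch))
    return "\n".join(f"note {n.pitch} {n.start:g} {n.length:g}" for n in ordered)


def text_to_notes(text: str) -> List[Note]:
    """Parse the hypothetical DSL back into notes (malformed lines are skipped here)."""
    notes = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 4 and parts[0] == "note":
            notes.append(Note(parts[1], float(parts[2]), float(parts[3])))
    return notes


Listener = Callable[[List[Note], str], None]


class SharedNoteModel:
    """Single source of truth that every editing view reads from and writes to."""

    def __init__(self) -> None:
        self.notes: List[Note] = []
        self._listeners: List[Listener] = []

    def subscribe(self, listener: Listener) -> None:
        self._listeners.append(listener)

    def _publish(self, origin: str) -> None:
        # Each listener receives the origin so it can skip its own edits.
        for listener in self._listeners:
            listener(self.notes, origin)

    def edit_from_code(self, text: str) -> None:
        self.notes = text_to_notes(text)
        self._publish("code")

    def edit_from_piano_roll(self, notes: List[Note]) -> None:
        self.notes = list(notes)
        self._publish("piano_roll")
```

A code-editor view would subscribe with a callback that re-renders `notes_to_text(notes)` whenever `origin != "code"`, and the piano roll would do the same for `origin != "piano_roll"`. A real editor would report malformed lines to the user rather than silently skipping them.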

Maxim Meadow plans to continue developing Phonauto as a passion project, adding richer features while keeping it reliable and inclusive.